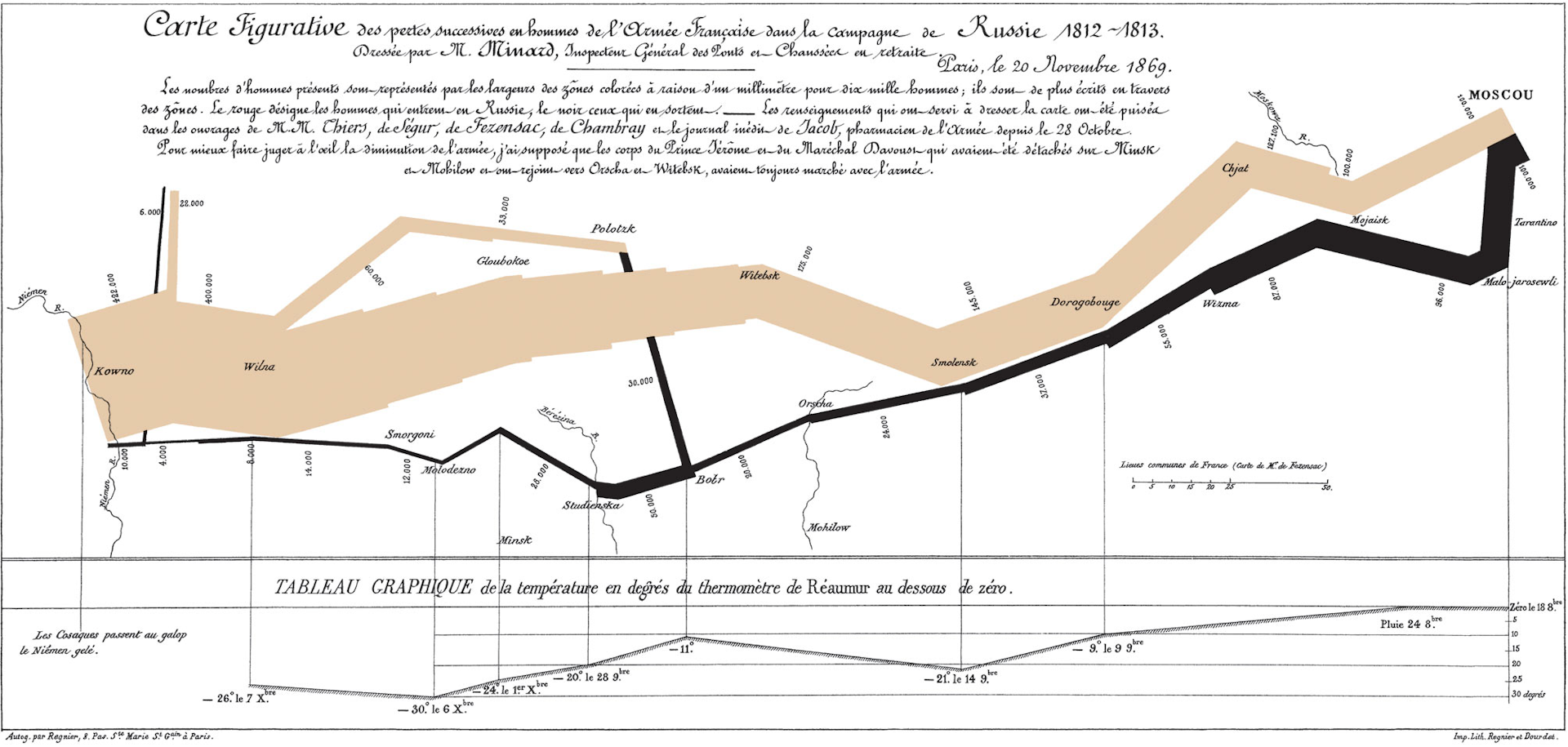

The image above was drawn by Charles Minard and is dated 20 November 1869. Some consider it the best infographic ever created. It represents six different pieces of information:

- distance,
- time,
- temperature,
- position (latitude and longitude),
- direction, and
- troop numbers.

It does all of this on a two-dimensional chart. It works so well that I need only tell you that it depicts Napoleon's historic march with his army to Moscow and back, and you have likely already worked out most of the information it shares. That holds even though the text is in French and too small to read.

The infographic explains so much and asks so little of the reader. It is the product of Charles Minard's intelligence and creativity: he found a way to represent all of that information in an easily digestible visualisation.

By now it is almost impossible not to have heard of ChatGPT. It has received more hype than Windows Vista and Duke Nukem Forever combined. To tell you what you already know: it is a machine learning model that generates plain-language text responses from plain-language text prompts.

My first experiences playing with ChatGPT have been fun and illuminating, but sometimes also a little disappointing. In my heart I wanted it to be the amazing, world-changing general-purpose AI that its most fervent supporters are hyping it to be.

Nobody can know where new AI models will go, or whether they will be world-changing or a giant dud. It takes little effort to simply dismiss new technology, or to jump on the bandwagon of the next hyped-up thing. Understanding something is the best we can do to guess where it will go.

Spend just a few minutes playing with ChatGPT and you will find that its responses are written in articulate, professional English. The prose is on par with that of a university-educated professional writer.

Even more remarkable is the apparent ease with which the style of the output can be controlled. See this example:

(Me) Write a 5 sentence account of the battle of Normandy.

(CGPT) The Battle of Normandy was a major World War II battle fought from June to August 1944. It was launched by Allied forces with the goal of establishing a foothold in northwestern Europe and ultimately liberating France from German occupation. ….

(Me) Repeat that in the tone of a drunk pirate.

(CGPT) Arrrr, matey! Let me tell ye about the Battle of Normandy! It was a big ol' scrap in World War II that lasted from June to August 1944. Them Allied forces wanted to get a foothold in northwestern Europe and liberate France from the German swabbies. ….

The change of style is remarkable, even if the response ran longer than five sentences. The style is clearly deliberate and under the control of the language model.

At the very least these technologies will become writing aids. Imagine writer's block quickly overcome by asking for a prompt, or for the scaffolding onto which an author affixes their ideas. They will also likely be used to fill content farms and attract mass readership. They could find a place in low-quality writing such as gossip magazines, instructions or disclaimers on packaging, or anywhere else where basic writing is all that is needed.

Finally, does it actually matter whether text came from a machine? Sometimes a Google-translated page is all I need to understand the facts of an article.

Writing as a trade may be overrated. Is it better to receive text written by a person in the finest style if it comes from a propaganda department or marketing firm, or text generated by AI if it is truthful and factually accurate?

As someone who has implemented a chatbot similar to the original Eliza, I can attest to how far very little logic and complexity can sometimes go. Such a bot can impress a user and give the illusion of a clever product.
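To give a sense of how little machinery such a bot needs, here is a minimal Python sketch in the spirit of Eliza. It is not my original implementation: the rules, the pronoun table and the `respond` and `reflect` functions are hypothetical, chosen only for illustration. Each rule is a regular expression whose captured text is reflected back to the user with the pronouns swapped.

```python
import re

# Hypothetical rules in the spirit of Eliza: each pattern maps to a response
# template that reflects the user's own words back at them.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bbecause (.+)", re.IGNORECASE), "Is that the real reason?"),
]

# Swap first- and second-person words so reflected phrases read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

FALLBACK = "Please tell me more."


def reflect(fragment: str) -> str:
    """Swap pronouns word by word, e.g. 'my job' -> 'your job'."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(message: str) -> str:
    """Return the first matching canned response, or a generic fallback."""
    cleaned = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            # Extra positional arguments are ignored by str.format, so
            # templates without a placeholder work too.
            return template.format(*(reflect(group) for group in match.groups()))
    return FALLBACK
```

Calling `respond("I am worried about my job")` returns "How long have you been worried about your job?". The reply feels attentive, yet nothing in the code understands anything. It only matches patterns and swaps pronouns.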

Dismissing ChatGPT as a glorified Eliza bot would be inaccurate, but it is important to discuss its shortcomings.

It is precisely its proficiency with language that makes ChatGPT so deceptive. Discerning how smart, competent or accurate somebody is remains a difficult problem, and we rarely scrutinise a speaker's or author's logic and reasoning.

We almost never think and reason about what someone has to say, even if we convince ourselves that we do. Instead we rely on heuristics. We have more regard for the salesman in the clean suit than for the one in scruffy pants. We respond to confidence, especially when it comes across as clear and articulate speech.

The video below satirises a similar practice among politicians. That profession often requires one to be seen responding to a question without actually sharing any meaningful ideas. Politicians are adept at talking a lot while saying very little. That idea is one of the premises of this satire, which shows the absurdity of behaving and speaking that way in everyday life. Absurd as it seems in the video, it appears to work well on the electorate.

It is clear that logic and reasoning are not emergent behaviours of a language model, no matter how much computing power or content is used to train it. The next three examples demonstrate this.

I should note that these are not cherry-picked. They are the immediate responses to the first three logic problems I put to ChatGPT to test its reasoning.

(Me) If you had a stick or a rope, which would you use to push a box? Why?

(CGPT) If I had a choice between a stick and a rope to push a box, I would use the stick. This is because a stick provides a more solid and direct surface for pushing the box, ….

(Me) Elaborate on "direct surface".

(CGPT) A direct surface refers to a solid and flat surface that can be used to apply force directly to an object, such as a box ….

The stick vs. rope question is one of my favourites for Turing tests. With only two options there is always a 50% chance of guessing correctly, so the key, of course, is to examine the reasoning present (or absent) behind the choice. Here the lack of thought behind the content reveals itself.

The above is a great example of the silver tongue of the charlatan AI. It is so well written that it would convince many readers it is the product of intelligent thought.

The argument about a solid and direct surface almost sounds logical and coherent. Read it closely, though, and it is complete gibberish. The real reason is that a stick is rigid and can transmit a push, whereas a rope can only carry tensile loads. The AI cannot reason about this.

Of course, this article, and this explanation, is now on the Internet and ready for ChatGPT to absorb. We will soon have to invent new logic puzzles. They are not hard to come up with, though, as you can see below.

(Me) If I had a problem with bats rolling away, should I select a baseball or cricket bat? Why?

(CGPT) If you have a problem with bats rolling away, I would suggest selecting a baseball bat. This is because baseball bats are typically heavier and have a larger diameter than cricket bats, ….

The above is wrong, of course. For those unfamiliar with cricket, a cricket bat is mostly flat, whereas a baseball bat is round. The AI cannot work this out and gives another well-written gibberish answer.

(Me) Please rank a basketball, Aussie rules football, soccer ball, cricket ball, rugby ball by how predictable their bounce is. Why did you select that order?

(CGPT) Here is the ranking of the balls in order of how predictable their bounce is, starting with the most predictable:

1. Basketball
2. Soccer ball
3. Cricket ball
4. Rugby ball
5. Aussie Rules football

I selected this order based on the size, weight, and material of each ball. ….

Just like the response to the stick/rope question, the answer above is not bad, but ultimately the reasoning is nonsense. The answer should be easy: the first three balls are round and the last two are irregular, although the rugby ball is slightly more irregular than the Aussie Rules football. Instead the AI discussed the size, material and hardness of the balls. The answer is trivial for anyone who has seen the different shapes of these balls.

This is something entirely alien to the human condition. You would never meet a person so capable of speaking eloquently yet so incapable of reasoning about the most trivial problems. It is precisely this that ChatGPT takes advantage of. ChatGPT exploits a weakness in how humans have adapted to measure the competence of other humans.

These ChatGPT-like language models are excellent tools for manipulating text. They have no ability to extract meaning from visual diagrams, or to connect abstract ideas to them. This idea is discussed in this video from the Sixty Symbols channel:

These are language models, and they have no way to process or generate images. That this is trivial to say does not make it any less relevant.

Images and diagrams are considerably more complex than the written word. The English language has a finite vocabulary and a reasonably regular syntax.

By contrast, there are infinitely many ways that images, objects and graphical elements can be drawn and arranged, and each element can have an arbitrary 2D geometry. Diagrams therefore carry far more information and complexity than text.

Truly useful graphics require reasoning about abstract ideas and manipulating visual artefacts to create an image that communicates those ideas. Good diagrams do this intuitively, demanding little mental effort from the reader.

Professions will adapt to these language models. Certain low-value activities will be handed over to machines. Other fields will serve readers better by letting language models help guide the written word, or improve it the way an editor helps structure ideas or correct grammar.

The ability to generate images and infographics is a huge differentiator between people and machines. It is one of the ways that people will be able to provide much more value than machines.

ChatGPT and the language models soon to follow are great at constructing natural language, and they have control over the tone and style of the end product.

ChatGPT is not without its shortcomings. It cannot apply logic to solve new problems. This essentially limits it to retrieving and summarising information that has already been published on the Internet or elsewhere.

Its strong command of language can mask this inability to think in any meaningful way. It slips past the filters people develop to assess the intelligence of whoever they are communicating with, or of whoever wrote the content they are reading.

We can expect some writing tasks to move to artificial language models, either entirely or with AI assistance. In future, writers may have AI correcting their style and suggesting passages in their text, building on the way spelling and grammar checkers are used today.

Humans will always be able to think and reason about ideas in a way that machines still cannot. Their advantage lies in generating new content and new ideas.

An important differentiator between people and machines is the use of visual graphics and diagrams to summarise and communicate ideas. These will continue to be very difficult for machines to generate and interpret.

Some professions will remain in the hands of people. Reasoning, and synthesising and interpreting visual information, are currently beyond machines. They cannot reason about a novel problem that has no documented answer.